P Vs NP |

|

|

In essence, this question is asking whether some calculations that are intrinsically hard (for some technical definition of "hard"), or whether all problems are effectively easy (for some technical definition of "easy".) The Knapsack Problem, for example, is thought to be hard, but no one really knows for sure. It may just be that no one has yet been clever enough to find the "right" way to do it.

Before we can discuss how hard a problem might be, we have to be very precise about what a problem really is. What does it mean to talk about "a problem"? What is "a problem"?

In the context of complexity theory, a "problem" is a collection ( invariably infinite in size) of questions. Each question is called an "instance" of the problem. We can talk about the "size" of the question, and then we can talk about how long it takes to solve questions of different sizes.

To avoid unnecessary complexity here, and to keep this overview simple, we will give three examples of problems, and discuss the definitions in relation to them. The three example problems are:

The question is - how long?

Take multiplication. The "long-multiplication" that used to be taught in school takes a very specific number of operations. If the first number has a digits, and the second number has b digits, then in general the act of multiplying them (using that method) requires a*b (and a bit) "actions".

If the numbers being multiplied are about the same size, then the formula becomes simpler. In general we ignore the smaller terms, and the constant at the front, and simply say that

Technically, the problem is in P. (And yes, that's "P" for "Polynomial")

There are algorithms that work for only special classes of numbers, but the current fastest general factoring method is called the "General number field sieve"

Don't ask.

The formula for its time complexity is horrendous, but you can read more about that here:

There are other, easier to understand methods of factoring, but they all require longer times than this. That's why this one's called "the best" (so far!)

It seems, though, that when we do this we sometimes end up having to try an exponentially large number of possible colours. Not always - some instances of the problem, some specific graphs, are actually quite easy. If there are no edges, for example, or if every cycle is of even length.

However, for every algorithm we've tried so far, there are cases that require an exponential amount of time.

The algorithms we have for 3-Colouring seem to be Exponential in complexity.

The 3-colouring problem seems to be in Exp, although we don't know for sure.

In other words, the task of checking a purported solution is polynomial.

Hmm. Thinks: can't solve, but can check. That leads to an interesting "algorithm":

Now, I'm not going to go into the details of what, in this context, is mean by "non-deterministic", but suffice it to say that if you have a problem for which checking a solution to a question is polynomial-time, that problem is said to be in NP.

|

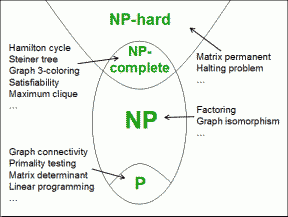

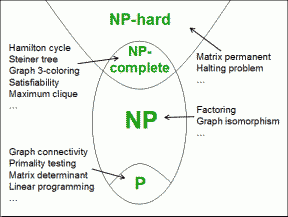

So every problem in P can have a solution checked in polynomial time.

That means every problem in P is also in NP.

We suspect, but don't know for sure, that there are problems in NP that aren't in P.

That is the question P vs NP

NP-Complete -> NPC

Contents |

Links on this page |

|

Suggest a change ( <--

What does this mean?) /

Send me email

Suggest a change ( <--

What does this mean?) /

Send me email|

|

Quotation from Tim Berners-Lee |

|